Hallucinations

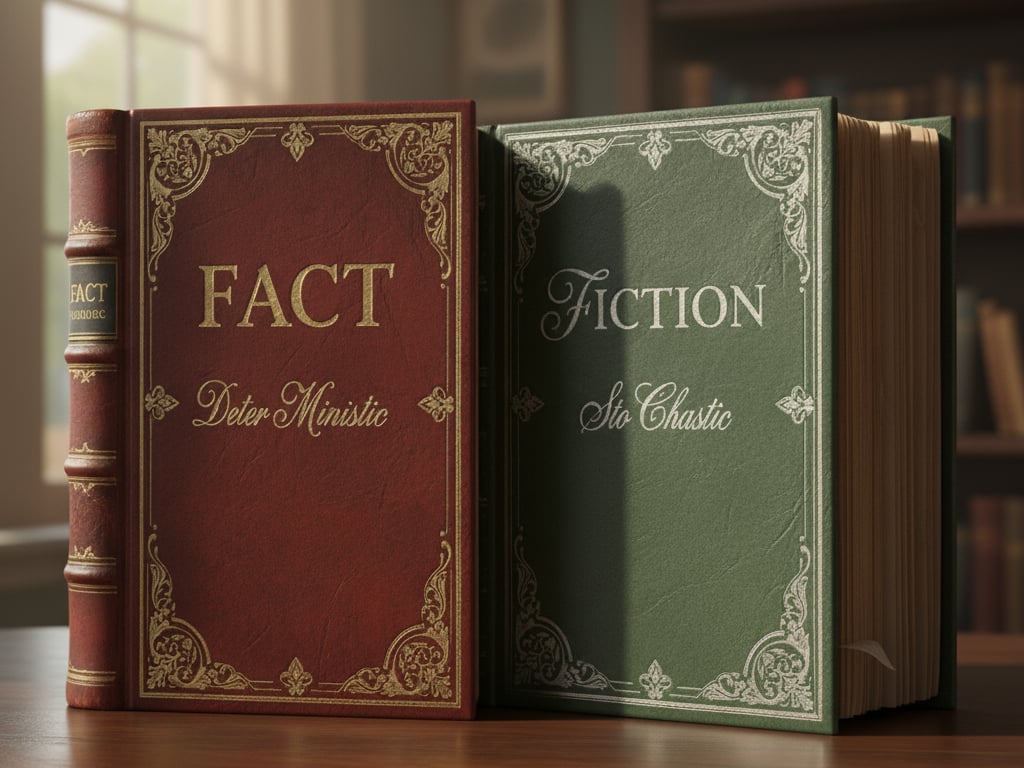

Because LLMs generate text probabilistically, they can produce plausible but false information. Tutello mitigates this hallucination risk through several safeguards: transparency with users, reference materials, prompt engineering, human oversight, and continuous data analysis.